The integration of Large Language Models (LLMs) into business operations is no longer a futuristic concept; it is a present-day imperative. As organizations race to harness AI, deploying models like DeepSeek on Hyper-Converged Infrastructure (HCI) is emerging as a game-changer. This blog explores how DeepSeek R1's open-source approach, industry-specific applications, and synergy with HCI are redefining efficiency, scalability, and innovation.

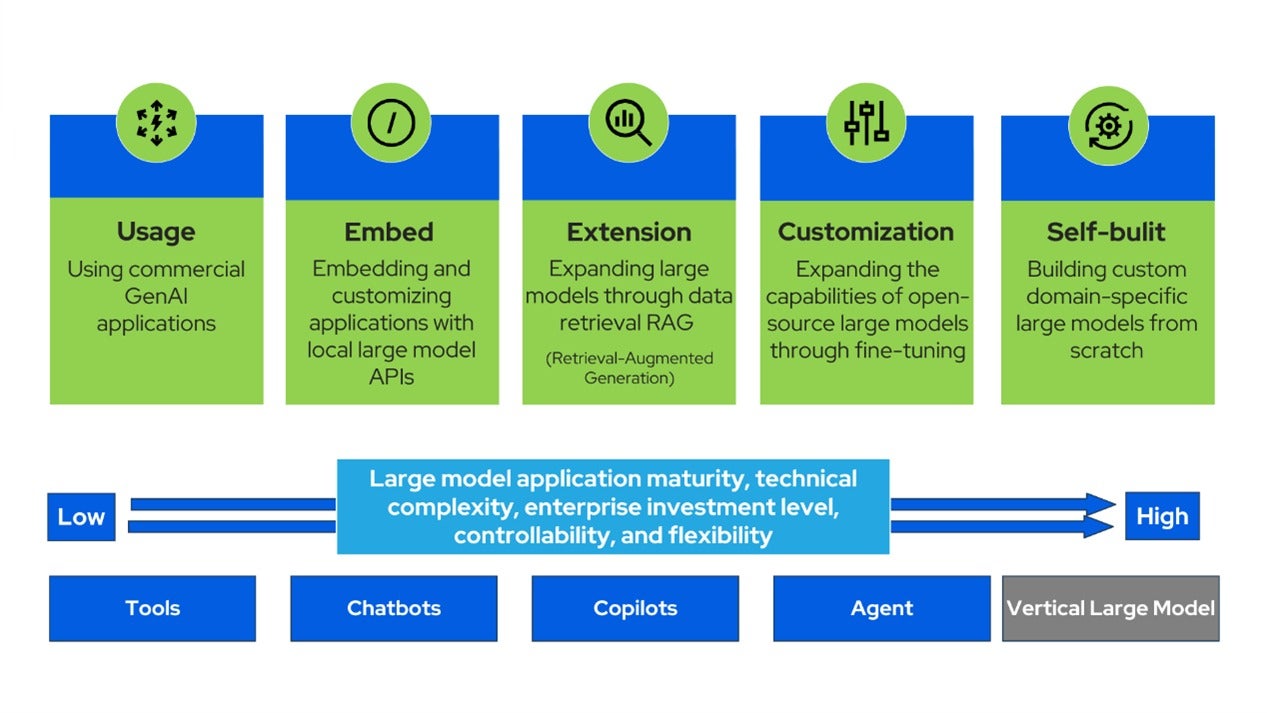

Diverse Paths to Applying LLMs

Before the advent of open-source models like DeepSeek R1, organizations typically applied LLMs through several general pathways, each with its own challenges and opportunities:

- Pre-Trained Models via APIs: Many businesses relied on proprietary LLMs offered by major tech companies through APIs. These models, such as GPT-3, provided quick access to powerful AI capabilities but often came with limitations like high costs, lack of customization, and dependency on third-party infrastructure.

- Fine-Tuning Existing Models: Some organizations fine-tuned pre-trained models on their proprietary datasets to better align with specific tasks. While this approach improved relevance, it required significant computational resources and expertise, making it inaccessible to smaller players.

- Building Models from Scratch: A few enterprises with deep pockets and technical expertise attempted to build their own LLMs from scratch. This path offered maximum control but was prohibitively expensive and time-consuming, often taking years to develop and deploy.

- Hybrid Approaches: Combining pre-trained models with custom-built modules or rule-based systems was another common strategy. This allowed businesses to balance flexibility and cost but often resulted in complex, hard-to-maintain systems.

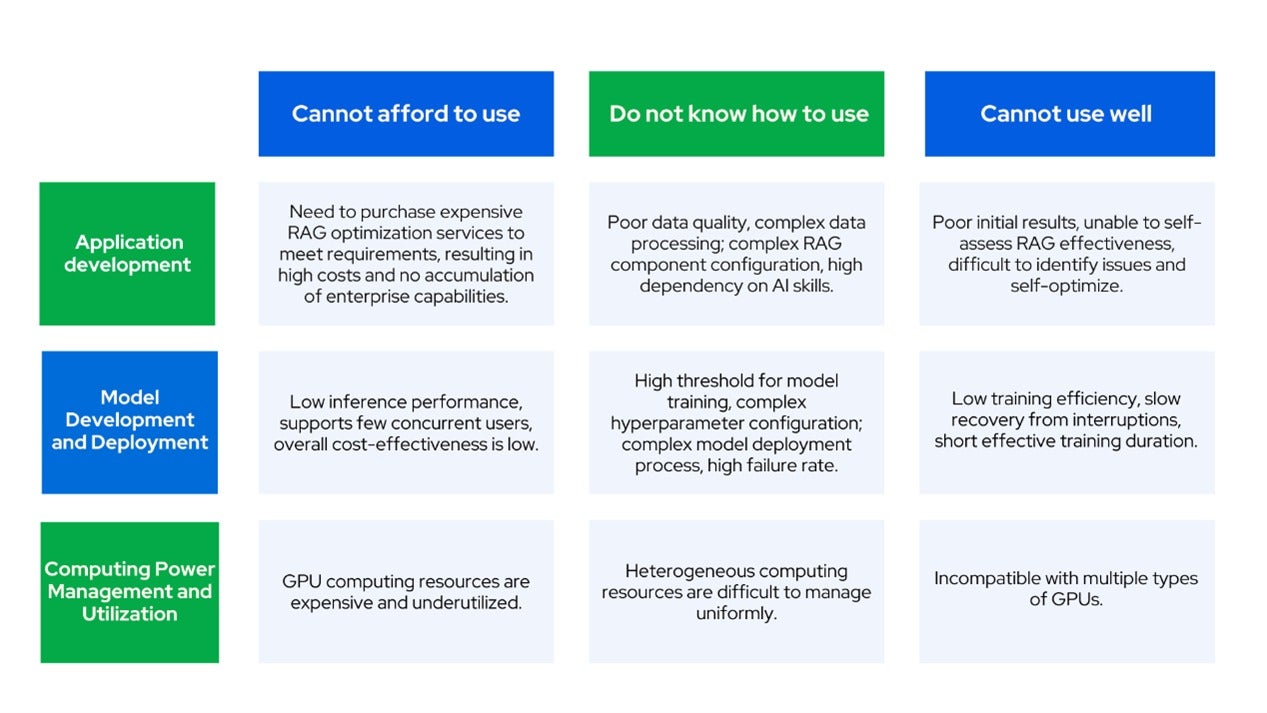

However, implementing LLMs in enterprises often comes with significant challenges. These include the high computational resources required, the complexity of integrating with existing systems, and the need for specialized expertise to manage and fine-tune the models. Concerns about data privacy and security can also be barriers to adoption.

The Open-Source Revolution

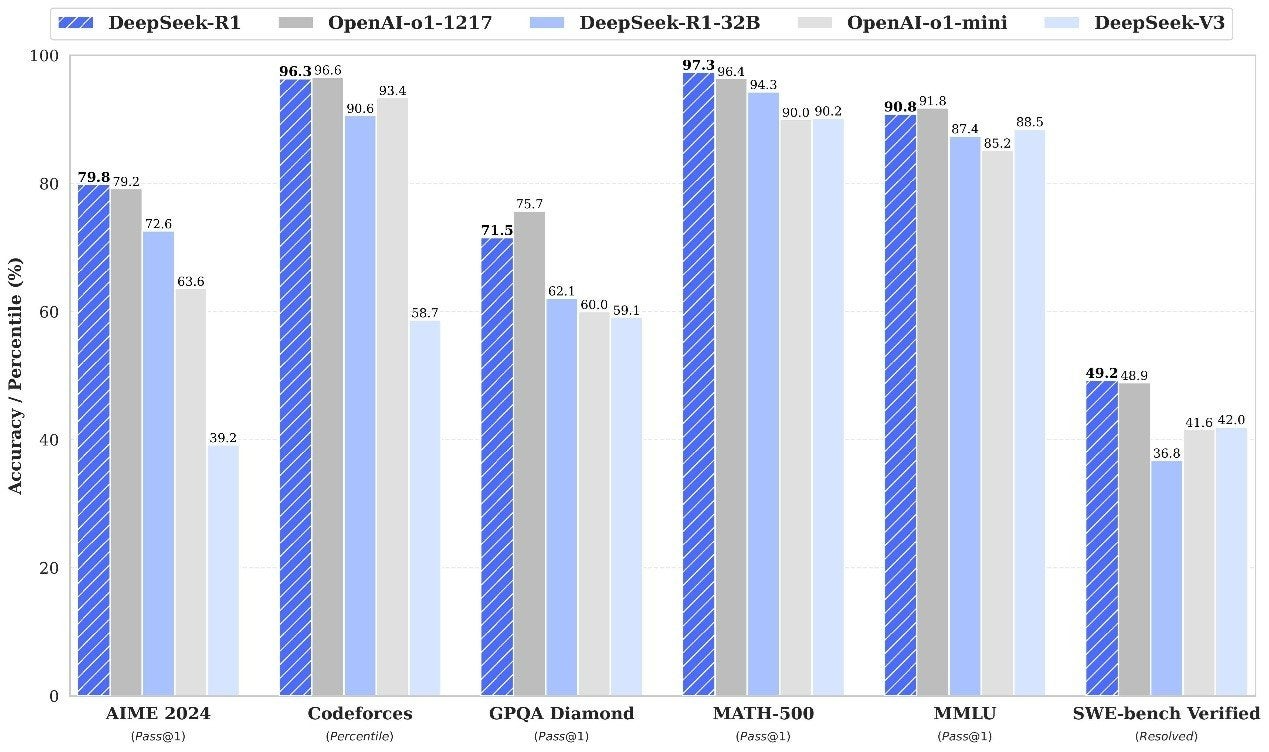

Open-sourcing DeepSeek R1 lowers the barriers to advanced AI adoption. On January 20, 2025, DeepSeek-R1, the latest model in the DeepSeek series, was released as open source and attracted widespread attention after outperforming OpenAI-o1 in multiple tests. The original DeepSeek-R1 model has 671 billion parameters. The official distillation produced DeepSeek-R1-Distill-Qwen-1.5B/7B/14B/32B and DeepSeek-R1-Distill-Llama-8B/70B. Among these, the 7B model already surpasses QwQ-32B-Preview, the state-of-the-art open-source model at the time, on the AIME 2024 mathematics benchmark. The 14B model's reasoning capabilities exceed QwQ-32B-Preview across the board, and the 32B model rivals OpenAI o1-mini. Even the 1.5B model is practical for edge and CPU-only deployment.

Source: DeepSeek

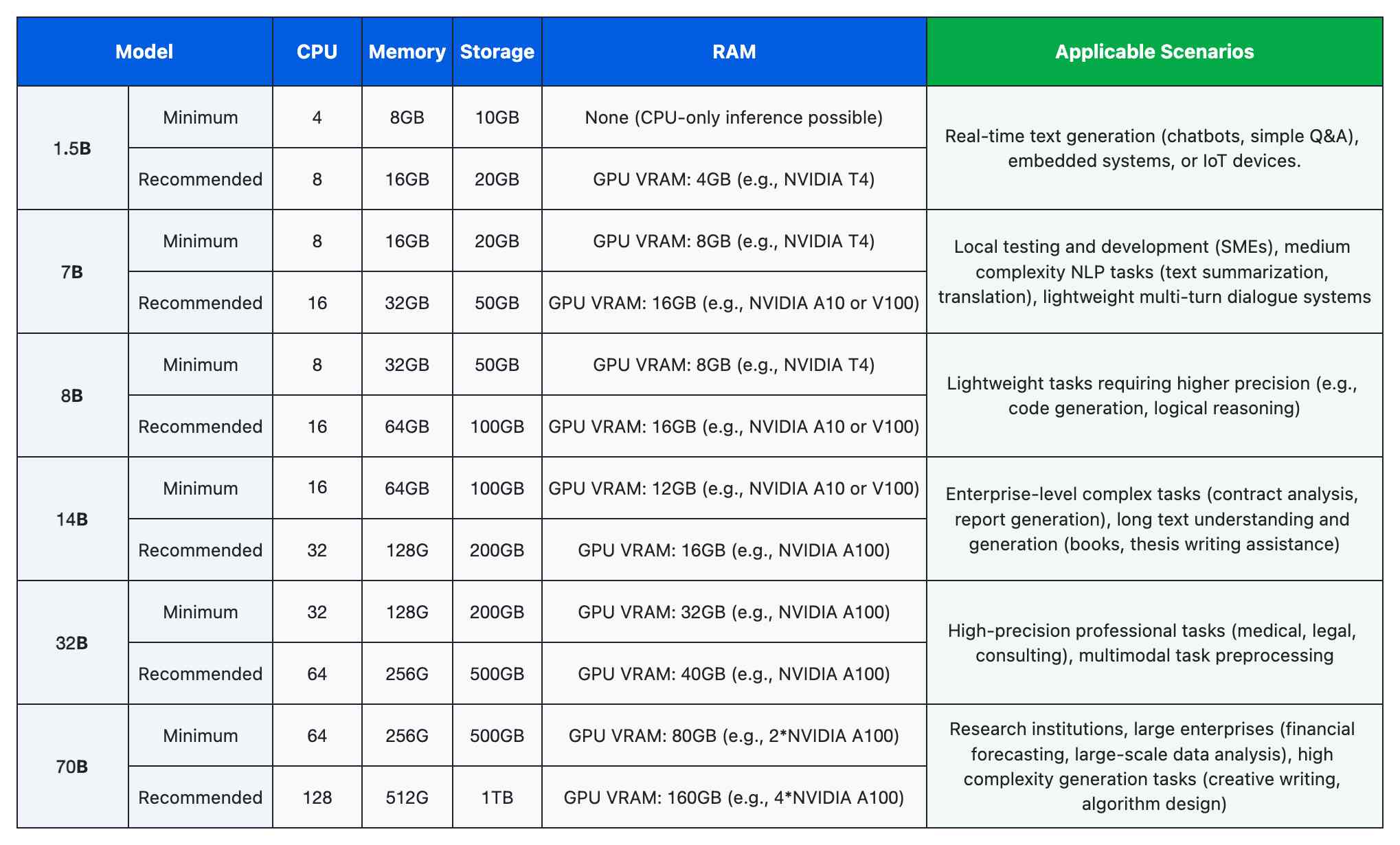

Distillation significantly reduces the models' resource requirements. The 1.5B model can run without a GPU, the 7B model is recommended for cards with 8GB or more of VRAM (such as the RTX 3070 or RTX 4060), the 14B model requires 16GB of VRAM (such as the RTX 4090), and the 32B model needs only 24GB of VRAM (such as two RTX 3090s). By making compact models practical, DeepSeek's distillation technology eases the cost dilemma of private deployment.
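As a rough sanity check on figures like these, weight memory can be estimated from the parameter count and the number of bits stored per weight. The sketch below is a back-of-the-envelope calculation, not a sizing tool: the function names are illustrative, and the `overhead_factor` of 1.2 (an allowance for KV cache and activations) is an assumed rule of thumb rather than a measured value.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    # One billion parameters at 8 bits per weight occupy 1 GB.
    return params_billion * bits_per_weight / 8


def estimate_total_memory_gb(
    params_billion: float,
    bits_per_weight: int,
    overhead_factor: float = 1.2,  # assumption: ~20% extra for KV cache and activations
) -> float:
    """Weights plus a rough allowance for runtime overhead."""
    return estimate_weight_memory_gb(params_billion, bits_per_weight) * overhead_factor


if __name__ == "__main__":
    # Compare FP16 and INT4 footprints for the distilled model sizes.
    for size in (1.5, 7, 14, 32, 70):
        fp16 = estimate_total_memory_gb(size, 16)
        int4 = estimate_total_memory_gb(size, 4)
        print(f"{size:>5}B  FP16 ~{fp16:6.1f} GB   INT4 ~{int4:6.1f} GB")
```

Under these assumptions, the 32B model's weights come to about 16 GB at INT4 but about 64 GB at FP16, which is why quantization matters so much for fitting a model into 24GB-class hardware.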

The open-source nature of DeepSeek allows organizations to customize and adapt the model to their specific needs without the constraints of proprietary software. Deploying DeepSeek on HCI will also simplify deployment and management, enhance performance and efficiency, strengthen security and reliability, and reduce costs.

Industry-Specific Scenarios: DeepSeek R1 in Action

The DeepSeek R1 model is applicable across a wide range of domains. The following are some key application areas:

Office Automation

DeepSeek can be integrated into office software to enable intelligent text processing, automatic report generation, and more. This improves work efficiency, reduces manual tasks, and enhances the intelligence and convenience of office operations.

Natural Language Processing

DeepSeek performs exceptionally well in tasks such as text generation, question-answering systems, machine translation, and text summarization. It can generate high-quality natural language texts such as articles, reports, and stories; provide users with precise answers, enabling intelligent customer service and virtual assistants; and facilitate language translation and summarization to help users quickly obtain key information.

Software Development

DeepSeek can assist in code generation, code completion, and code optimization, significantly improving development efficiency and code quality. It helps developers quickly implement features, reduce coding time and workload, and analyze and optimize existing code to enhance readability and performance.

Education

DeepSeek can be used to develop intelligent educational assistance tools that provide personalized learning recommendations and answers based on each student's progress and difficulties. It helps students understand the material, improves learning outcomes, and can be integrated into online education platforms for real-time academic support.

Enterprise On-Premises Deployment

Typical on-premises applications of DeepSeek include:

- Building enterprise-level intelligent knowledge bases

- Developing enterprise-grade office assistants
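One common way to build a knowledge base on top of a locally deployed model is to retrieve the most relevant internal passages first and then place them in the prompt. The sketch below illustrates that idea only: the word-overlap ranking is a deliberately simple stand-in for a real search or embedding index, and `tokenize`, `retrieve`, and `build_prompt` are hypothetical helper names, not part of DeepSeek or Sangfor HCI.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no overlap, then keep the best-scoring ones.
    scored = [(score, doc) for score, doc in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question: str, documents: list[str], top_k: int = 2) -> str:
    """Assemble a prompt that asks the model to answer from retrieved context."""
    passages = retrieve(question, documents, top_k)
    context = "\n".join(f"- {p}" for p in passages) or "- (no matching passage)"
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


if __name__ == "__main__":
    # Made-up sample documents, for illustration only.
    docs = [
        "VPN access requires a hardware token.",
        "Expense reports are due on the fifth of each month.",
        "The cafeteria opens at 8 am.",
    ]
    print(build_prompt("When are expense reports due?", docs))
```

Because retrieval and generation both run on premises in this design, internal documents never have to leave the organization's own infrastructure.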

DeepSeek Basic Model Performance

| Model Type | Benchmark Performance | Applicable Scenarios |
|---|---|---|
| 1.5B | Below QwQ-32B-Preview | Edge deployment, pure CPU deployment |
| 7B, 8B | On par with QwQ-32B-Preview | Personal development and testing |
| 14B | Slightly above QwQ-32B-Preview, on par with o1-mini | Internal enterprise pilot |
| 32B | Exceeds QwQ-32B-Preview, exceeds o1-mini | Enterprise-level production application |
| 70B | Exceeds QwQ-32B-Preview, exceeds o1-mini | Vertical domain commercial production application |
| 671B | Exceeds OpenAI-o1-1217, exceeds o1-mini | Top vertical domain application, large-scale inference service |

Model Configuration Recommendations (Based on an Ollama Deployment, Using INT4 Quantized Models)

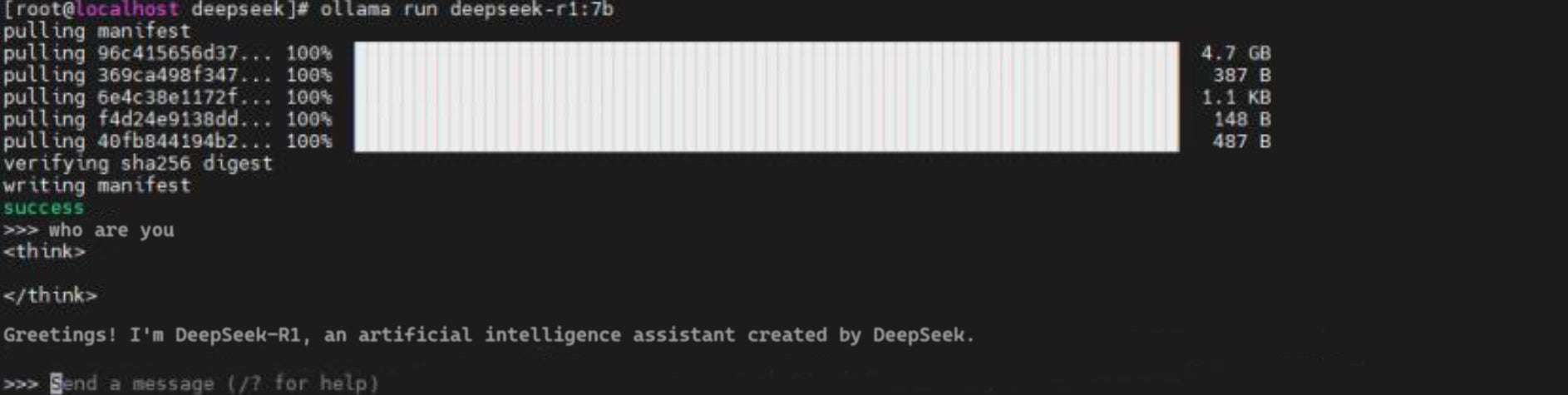

The 7B model balances performance against resource requirements, making it suitable for deployment on Sangfor HCI systems. The accompanying screenshot shows a successful deployment together with its parameter configuration details.
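Once a 7B deployment of this kind is running under Ollama, it can be exercised from a short script. The sketch below assumes Ollama's default local endpoint (`http://localhost:11434/api/generate`) and the model tag `deepseek-r1:7b`; both should be adjusted to match the actual virtual machine and the tag that was pulled. The helper names are illustrative. Because R1-style models emit their reasoning inside `<think>` tags before the answer, the sketch strips that block from the returned text.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumption: default Ollama address
MODEL_TAG = "deepseek-r1:7b"  # assumption: replace with the tag actually deployed


def build_payload(prompt: str, model: str = MODEL_TAG) -> bytes:
    """Encode a non-streaming generate request as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def strip_reasoning(text: str) -> str:
    """Remove the <think>...</think> block that precedes the final answer."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def ask(prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to the Ollama server and return the final answer text."""
    request = urllib.request.Request(
        url,
        data=build_payload(prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the generated text is returned in the "response" field.
    return strip_reasoning(reply["response"])
```

With the server reachable, a call such as `ask("Summarize the benefits of HCI in two sentences.")` returns the model's answer as a plain string. Setting `"stream": False` keeps the example simple; an interactive assistant would more likely stream tokens as they arrive.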

Why DeepSeek R1 on HCI? The Ultimate Synergy

- Simplified Deployment and Management

  - Plug-and-play: The Sangfor HCI platform provides a pre-integrated virtualization environment, allowing for quick deployment of DeepSeek without complex configuration.

  - Unified management: Through the Sangfor HCI management interface, users can centrally manage DeepSeek's virtual machines, storage, and network resources, simplifying IT operations.

- Enhanced Performance and Efficiency

  - High-performance computing: The distributed architecture of the Sangfor HCI platform provides high-performance computing, storage, and GPU resources to meet DeepSeek's computational needs.

  - Elastic resource scaling: The Sangfor HCI platform supports flexible resource scaling based on DeepSeek's business demands, avoiding wasted resources.

  - Data localization: Storing DeepSeek's data locally minimizes network latency and improves data processing efficiency.

- Improved Security and Reliability

  - Data security: The Sangfor HCI platform provides security mechanisms such as data encryption and access control to safeguard DeepSeek's data.

  - High availability: The Sangfor HCI platform supports high-availability architectures, including two-node hot standby and failover, ensuring business continuity.

  - Disaster recovery: The platform supports data backup and recovery, enabling quick restoration of DeepSeek's business data.

- Cost Reduction

  - Hardware cost savings: The software-defined architecture of the Sangfor HCI platform reduces hardware acquisition costs.

  - Lower IT operations costs: The simplified operations and maintenance of the Sangfor HCI platform reduce administrative overhead.

  - Optimized resource utilization: The Sangfor HCI platform maximizes resource utilization, minimizing waste.

Conclusion: The Future is Open, Scalable, and Intelligent

The deployment of DeepSeek R1 on HCI represents more than a technical milestone; it is a paradigm shift. By combining open-source flexibility with HCI's agility, businesses can deploy AI solutions that are as dynamic as their challenges. Whether automating office work, accelerating software development, or personalizing education, DeepSeek R1 on HCI empowers organizations to turn ambition into action.

As the AI landscape evolves, one truth remains clear: The winners of tomorrow will be those who harness the synergy of cutting-edge models and resilient infrastructure today. With DeepSeek R1 and Sangfor HCI, that future is within reach.

Ready to transform your industry? Explore DeepSeek R1 on HCI, where innovation meets scalability.