Artificial Intelligence (AI) platforms have become a major trend across the globe, with many companies rushing to join the AI race and release the latest, most efficient, and most reliable version possible. This has led to a staggering amount of AI news each week and an ever-increasing number of AI trends to follow. In this blog article, we take a look at some of the top AI insights to make headlines – from Grok 3 and OpenAI to Mistral AI and DeepSeek. We also take a look at some of the advantages of using AI in cybersecurity and some of the risks the technology presents. For now, let’s get a better understanding of the top AI news circulating.

The Latest AI News

AI trends are constantly rocking the boat, and companies are now clamoring to be the first to innovate and improve the technology ahead of the competition – and it’s not difficult to see why. Establishing your AI platform in such a volatile and emerging industry can either make or break your reputation on a global scale. To be pioneers of such a fast-evolving technology, companies need to always stay two steps ahead and be ready to make the next move. Now, let’s look at the main AI platforms stealing the limelight today.

Grok 3 Debut

On the 17th of February 2025, Elon Musk debuted Grok 3 – the latest model from his AI start-up, xAI. Musk revealed the model during a live-streamed event in which he also claimed that Grok 3 performs better across math, science, and coding benchmarks than most of its competition. The new model uses a smart search engine called DeepSearch – which is described as “a reasoning-based chatbot capable of articulating its thought process when responding to user queries.”

Musk further described the chatbot as being “in a league of its own,” and added that the model outperforms its predecessor, Grok-2. The chatbot is capable of advanced reasoning, text-to-video conversion, and self-correction mechanisms. Grok 3 was immediately rolled out to Premium+ subscribers on X and will have a new subscription tier called SuperGrok for users accessing the chatbot via its mobile app and Grok.com website.

At its launch, Musk labeled Grok 3 as the “smartest AI on Earth,” and noted that the mission of xAI and Grok is to understand the universe. “We want to answer the biggest questions: Where are the aliens? What’s the meaning of life? How does the universe end? To do that, we must rigorously pursue truth,” Musk concluded. The chatbot is powered by the Colossus supercomputer, which uses 100,000 Nvidia H100 GPUs to deliver 200 million GPU hours for training. This massive computational power allows Grok 3 to process vast datasets efficiently and set a new standard in AI performance.
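To put those figures in perspective, here is a rough back-of-the-envelope sketch that divides the quoted GPU hours by the quoted GPU count. It assumes, purely for illustration, that all 100,000 GPUs ran concurrently with no idle time, which the announcement does not state; the function name is our own.

```python
# Back-of-the-envelope check of the quoted Colossus figures.
# Assumption (not from the announcement): every GPU runs concurrently with no idle time.
GPU_COUNT = 100_000
TOTAL_GPU_HOURS = 200_000_000


def wall_clock_days(total_gpu_hours: float, gpu_count: int) -> float:
    """Return the wall-clock days needed if all GPUs run in parallel."""
    hours_per_gpu = total_gpu_hours / gpu_count
    return hours_per_gpu / 24


if __name__ == "__main__":
    hours_each = TOTAL_GPU_HOURS / GPU_COUNT
    days = wall_clock_days(TOTAL_GPU_HOURS, GPU_COUNT)
    print(f"{hours_each:.0f} hours per GPU, roughly {days:.0f} days of wall-clock time")
```

Under that assumption, the quoted numbers work out to about 2,000 hours per GPU, or a little under three months of continuous training.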

Mistral AI Releases Le Chat

The French company Mistral AI also made waves recently with the release of its AI assistant chatbot, Le Chat. The highly popular platform claims to be the world’s fastest chatbot – delivering 1,000 words per second. Le Chat can reason, reflect, and respond at impressive speeds and aims to “transform complex tasks into achievable outcomes with AI that speak every professional language.” President Emmanuel Macron has also repeatedly encouraged French citizens to download the app. According to the company, Le Chat is 10 times faster than popular models such as ChatGPT 4o, Sonnet 3.5, and DeepSeek R1.
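As a rough illustration of what those speed claims would mean in practice, the sketch below compares how long a single answer would take to generate. It assumes that “10 times faster” means competing assistants produce about 100 words per second, and the 500-word answer length is a hypothetical value chosen for the example.

```python
# Rough comparison of response times implied by the quoted speeds.
# Assumption: "10 times faster" means other assistants produce ~100 words per second.
LE_CHAT_WORDS_PER_SECOND = 1_000
CLAIMED_SPEEDUP = 10


def seconds_to_generate(words: int, words_per_second: float) -> float:
    """Return the time needed to generate `words` at a constant rate."""
    return words / words_per_second


if __name__ == "__main__":
    answer_words = 500  # hypothetical answer length
    fast = seconds_to_generate(answer_words, LE_CHAT_WORDS_PER_SECOND)
    slow = seconds_to_generate(answer_words, LE_CHAT_WORDS_PER_SECOND / CLAIMED_SPEEDUP)
    print(f"Le Chat: {fast:.1f}s, a 10x slower assistant: {slow:.1f}s")
```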

DeepSeek

Another massive disruption in AI news was the release of the wildly popular DeepSeek AI assistant. The Chinese platform climbed the charts within days of its release to become the Apple App Store’s most downloaded app – almost instantly overtaking OpenAI’s ChatGPT mobile app. The release of DeepSeek R1 caused a massive upset in the US stock market, where several major US-based AI companies dropped in value due to the sudden rise in popularity of the Chinese AI.

The platform itself also gained further attention due to how cost-efficient it was to create – reportedly costing less than US$6 million to train. DeepSeek R1 also uses less memory than its competitors – further reducing the cost of performing tasks for users. DeepSeek can provide solutions across several subjects – including complex problems in mathematics and coding. DeepSeek R1 is a ‘reasoning model’ – like OpenAI’s o1 model – and produces responses incrementally, simulating how a human would reason through problems or ideas.

OpenAI’s ChatGPT

OpenAI is possibly the biggest name in AI technology, following the pioneering release of its ChatGPT model in late 2022. The platform was built to interact conversationally and answer questions from users. The company's latest update to ChatGPT 4o is being touted as the "best search product on the web." GPT-4o was released in May 2024 and immediately impressed users with its ability to handle text, audio, and images as inputs and outputs.

Now, in AI news, OpenAI has announced a new content policy change that gives its AI models more freedom to discuss sensitive issues and embrace topics that they were once trained to avoid. The Model Spec update – which is a guideline for AI training – was announced to include a new guiding principle: Do not lie, either by making untrue statements or by omitting important context. According to the company, this update reinforces its commitment to customizability, transparency, and intellectual freedom to explore, debate, and create with AI without arbitrary restrictions – while ensuring that guardrails remain in place to reduce the risk of real harm.

“This principle may be controversial, as it means the assistant may remain neutral on topics some consider morally wrong or offensive,” OpenAI said in the new section of the spec. “However, the goal of an AI assistant is to assist humanity, not to shape it.” OpenAI itself was co-founded in 2015 by a group that included Sam Altman and Elon Musk, but Musk left the company after a falling out. Musk later offered to buy OpenAI in a takeover bid, to which Altman responded that the company was "not for sale."

Google’s Gemini AI

Meanwhile, Google’s Gemini AI platform has been making its own strides since its release in 2023. The company spent the start of 2025 rolling out Gemini 2.0 Flash, part of its latest Gemini 2.0 model family – allowing the platform to deliver faster responses, provide stronger performance, and ensure better brainstorming, learning, and writing.

On the 18th of February 2025, the company announced the release of Deep Research on the Gemini mobile app for all Gemini Advanced users. This allows Android and iOS users to generate comprehensive and simplified reports on several research topics – saving hours of research time. The next day, Google launched its AI co-scientist, a new AI system built on Gemini 2.0 that is designed to “aid scientists in creating novel hypotheses and research plans.” According to Google, researchers can now specify a research goal using natural language and the AI co-scientist will propose testable hypotheses - along with a summary of relevant published literature and a possible experimental approach.

Samsung also announced that its Galaxy S25 series would be the first family of phones to include Google's Project Astra. This allows the phone to analyze the world around it through its camera and provide personalized answers that text-only chatbots can't replicate.

Microsoft’s Copilot

Microsoft also has its Copilot AI chatbot to keep in the race. Advertised as “your everyday AI companion,” Copilot was also released in 2023, replacing the virtual assistant Cortana. The chatbot is available as a website, an app, and as a sidebar in the Edge web browser – it also comes built into Windows and Microsoft 365 apps, including Word, Excel, Outlook, and PowerPoint.

Copilot is driven by the multimodal large language model GPT-4 that was developed by ChatGPT creator OpenAI. In early 2025, Microsoft made OpenAI’s o1 model free for all Copilot users – presenting it as a Think Deeper button that offers improved reasoning and logic abilities for broader and more complex queries. While users might opt for ChatGPT, which is built on the same family of models, Copilot’s integration with Windows and Microsoft 365 makes it a strong choice for optimizing productivity and workflow.

Anthropic’s Claude AI

Anthropic also released its Claude AI platform in 2023 to assist users in solving problems, automating tasks, and even creating content. The model was named after Claude Shannon, the "father of information theory," and prides itself on understanding and processing language intelligently. Claude 3.5 Sonnet is the latest and most advanced version of Claude AI – designed for tasks requiring complex reasoning, coding, and data interpretation.

On the 14th of February, Anthropic signed a Memorandum of Understanding with the UK’s Department for Science, Innovation and Technology (DSIT) to develop AI technologies that enhance public services. The collaboration will focus on using Claude to enhance how people in the UK access and interact with government information and services online – further establishing best practices for the responsible deployment of frontier AI capabilities in the public sector.

While each of these AI giants has merits and essential capabilities, it’s important to note that every technology has inherent risks as well – especially when it comes to securing our digital space. Let’s take a closer look at some of those unique benefits and risks from the perspective of cybersecurity.

Advantages and Dangers of AI for Cybersecurity

AI has always been touted as an advanced technology that will elevate the way we live our lives; however, it would be irresponsible to ignore the risks of using AI. While a large chunk of criticism for AI stems from generative AI being used to replace artists or writers, AI in itself is not necessarily bad in all its forms. For decades now, several apps have made use of AI technology to simplify processes and automate tasks – most notably in the cybersecurity sector.

Cybersecurity is a field that relies on accuracy, speed, and reliability. As humans, we are prone to making mistakes and can represent a weak link when it comes to robust cybersecurity. With AI, however, cybersecurity measures can be more automated, resilient, and consistent. These are some of the benefits of AI in cybersecurity:

- Providing immediate detection of malware and cyber threats. AI can be used to elevate threat hunting and ensure immediate alerts are sent out once a specific cyber-attack pattern is identified.

- Automating tasks within the cybersecurity framework. These might be tedious for humans; however, AI is dependable and resilient – allowing it to automate simple and repetitive tasks that ensure a secure front.

- Consistent endpoint protection. AI-based cybersecurity allows your team to protect vulnerable endpoints from malware and suspicious files.

- Consistent monitoring across the network. AI-enabled cybersecurity platforms allow you to monitor your entire network to detect and mitigate cyber threats in real-time.

- Providing cybersecurity testing. AI can be used to create scripts that might test your company’s cybersecurity measures – allowing you to improve on weaker areas.

- Curating updated threat intelligence. Cyber threats are constantly evolving and expanding across the world. AI cybersecurity allows you to stay up to date with the latest threat trends as they happen.
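The detection-and-alert idea in the list above can be illustrated with a small sketch. It is not how any particular AI security product works; it uses a simple statistical check (a z-score against a recent baseline) as a stand-in for a trained model, and the function name, threshold, and sample numbers are all hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(baseline: list[int], observed: int, threshold: float = 3.0) -> bool:
    """Flag `observed` if it sits more than `threshold` standard deviations above the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any increase counts as unusual.
        return observed > mu
    return (observed - mu) / sigma > threshold


if __name__ == "__main__":
    # Hypothetical failed-login counts per minute during normal operation.
    normal_minutes = [4, 6, 5, 7, 5, 6, 4, 5]
    for count in (6, 40):
        if is_anomalous(normal_minutes, count):
            print(f"ALERT: {count} failed logins in one minute looks like an attack pattern")
        else:
            print(f"OK: {count} failed logins is within the normal range")
```

A real platform would learn far richer patterns than a single count, but the flow is the same: establish what normal looks like, compare new activity against it, and raise an alert as soon as something falls outside that range.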

While AI can be used to enforce better cybersecurity, it can conversely be used to spread malware and orchestrate cyber-attacks. The accessibility and natural language capabilities of AI platforms also allow novices to carry out cyber-attacks without any prior knowledge or skills. This opens up a whole new attack surface where anyone with access to AI can lead a malicious campaign. These are some of the ways AI can be used to undermine cybersecurity:

- Phishing Emails: Phishing is one of the most common types of cyber-attack, and AI has made it much easier to automatically generate credible-looking phishing emails and content.

- Malware Code Generation: AI can and has been used to generate malware and orchestrate cyber-attacks on companies and private networks.

- Botnet Attacks: AI can help hackers carry out targeted botnet attacks, in which a collection of internet-connected devices is infiltrated and hijacked by a hacker.

- Jailbreaking: This is the manipulation of AI models to generate uncensored or unrestricted content. Threat actors can use this method to evolve their hacking techniques and skills to commit worse crimes.

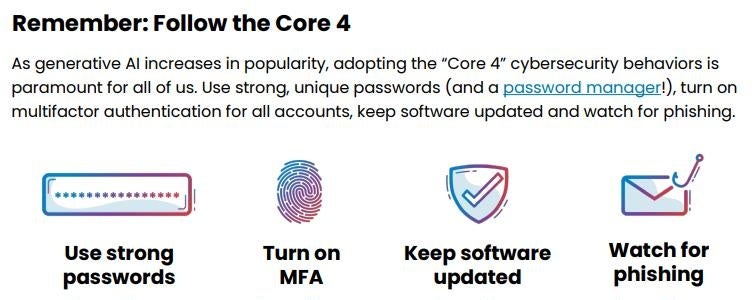

While AI can be used to facilitate several cybercrimes, it’s also crucial to note that these companies are doing their best to set restrictions and secure their AI platforms to prevent misuse. There are also ways that users can participate in creating a culture of using AI safely.

How to Use AI Safely

AI is a growing trend and doesn’t seem to be going anywhere any time soon. So, it’s your responsibility as a user to ensure that you use AI correctly and safely. These are some tips from CISA that you can follow to ensure that your AI experience remains secure, reliable, and correct:

- Be mindful of your inputs: Remember that AI platforms may learn from the inputs that you provide. This means that any confidential or sensitive information you place into a chatbot is not entirely private. This is especially crucial for businesses, where your company data could be regurgitated in an answer for someone else.

- Ensure your privacy online: AI models can also scrape data from the web – which means they might use your information for training and for generating answers as well. Pay attention to privacy settings on apps and websites.

- Be extra wary of social engineering attacks: As we’ve mentioned before, AI can be used by hackers to create deepfakes, generate phishing scams, and create malware. Stay vigilant and try to avoid any suspicious interactions online.

- Do not trust AI as a credible source: AI platforms are prone to hallucinations and can often generate completely false results. Do not use any AI platform to generate credible information or for any form of research.

- Be wary of what you share online: AI images and deepfakes are often found scattered around the internet and many people might use these images to provoke animosity, stir tensions, and generate interactions online. It is your responsibility to verify what you see and repost to prevent the spread of misinformation.

Sourced from CISA
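One practical way to act on the first tip is to strip obvious sensitive details from a prompt before it is sent to a chatbot. The sketch below is a minimal illustration and is not part of the CISA guidance; the two patterns (email addresses and card-like digit sequences) and the `redact` helper are hypothetical examples, and a real deployment would need much broader and carefully tested rules.

```python
import re

# Hypothetical patterns for illustration only; they will not catch every format.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace anything matching a known sensitive pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com about card 4111 1111 1111 1111."
    print(redact(prompt))
```

Running the cleaned prompt through the chatbot instead of the original keeps the question intact while leaving the personal details out of the conversation.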

These AI insights into the latest trends and headlines should serve as a reminder that while we might aim to elevate technology further each day, it is also our responsibility to do so without compromising safety and security.

Conclusion

AI platforms across the globe are now pushing to be the best and most popular, and while innovation should never be shunned in technology, it must come with a sense of shared responsibility. The AI revolution has created a fast-paced industry hellbent on being the first to innovate and excel. However, we must all do our part to create with integrity and with an understanding of the evolving and often hostile industry we aim to lead.